Research Question

This article explores the use of logistic-shaped diffusion curves (S-Curves) to predict the accumulation of atmospheric Atmospheric is measured at a number of stations globally, the longest continuous series is from Mauna Loa, and the data series, the Keeling Curve, (Keeling et al. 2001) has been made famous in, among other places, An Inconvenient Truth (Gore 2006). in the atmosphere results from a variety of causes, but transport is considered one of the primary sources, amounting to about 24% of total emissions annually (Solaymani 2019).

As a well-known data series, the Keeling Curve has been used to demonstrate the relatively steady increase of atmospheric which has separately been correlated with the rise of global temperature.

Logistic Curves have been used for historic analysis, especially in the domain of understanding technology deployment, particularly the deployment of transport networks, and are used prospectively for forecasting (De Tarde 1890; Rogers 1995; Marchetti 1980; Batten and Johansson 1985; Nakicenovic 1988; Garrison 1989; Grubler 1990; Garrison and Souleyrette 1996; Levinson and Krizek 2017; Dediu 2018). Despite, for instance, greater car use, cleaner engines, growing population, implementation of carbon policies, we ask if the curves of accumulation are stable – in other words, are those changes small or offsetting, and have trends that are already embedded in the function? While the level of in the atmosphere is the result of countless microscopic individual decisions and actions, along with random environmental factors, perhaps the resulting aggregate trends produce a predictable macroscopic pattern.

The broader research question here is whether forecasts using logistic curves are stable as suggested by historic analyses of other systems, that is, do they predict consistently over time with different amounts of data? To the extent they are stable, we suppose they are more reliable for forecasting.

Methodology

S-Curves use the following equation

StSmax−St=eb⋅t+c(1)

or

ln(StSmax−St)=b⋅t+c(2)

Where:

= system status accumulation) at time .

= maximum system status (ultimate accumulation in the atmosphere).

= time (year). (Data are reported in months, denoted as decimal years)

= model parameters

The objective is to solve for and to best explain the relationship.

To apply the model, it is helpful to estimate the midpoint or the inflection year It turns out that:

ti=c−b(3)

We can then estimate the system size (in this case accumulation) in any given year using the following equation:

ˆSt=Smax1+e(−b(t−ti))(4)

In back-casting, explaining the deployment of extant systems, is apparent. We aim to identify the final systems status for a system whose deployment is ongoing. While we may know the current and historic system size use of an S-curve requires knowing how large the system will be.

The method here solves for and which maximize goodness of fit for the equation, measured as the We use ordinary least squares regression to solve for and for a given and find the best using a generalized reduced gradient solver aiming to maximize by adjusting

The monthly average atmospheric concentrations in parts per million (ppm) are derived from in situ air measurement at Mauna Loa, Observatory, Hawaii: Latitude N, Longitude W, Elevation 3397m, as recorded by the Scripps Institution of Oceanography. The raw data, interpolated to complete missing observations, was used.

Starting with a pre-industrial baseline level of 280 ppm (Etheridge et al. 1998), we find the parameter estimates for the best fit logistic curve for the Keeling Curve. We do this at 7 different points of time (1960, 1970, 1980, 1990, 2000, 2010, 2020) using the data available at those points in time. So for 1960, we use data from 1958-1959, for 1970, data from 1958-1969, and so on, until 2020, which uses all the available data through the end of 2019.

Findings

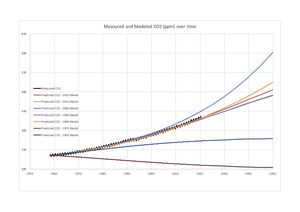

Table 1 shows the parameter values for each model, and Figure 1 shows those values graphed, along with the observed data. As can be seen from the figure, the models give a wide variation in results. While all models since 1980 are reasonably good fits and reproduce the observed data they are trying to replicate, and the 2020 model has a very good fit they produce very different outcomes. The growth of is not steady, and some decades have more change than others. The use of early forecasts to estimate maximum system states is precarious.

The 1960 model, using slightly less than 2 years of data (22 months), does not predict an increase in at all, and instead takes the best-fit value of (330 ppm) and sees that the maximum has already been reached, and the accumulation of is in decline.

The 1970 model forecasts a small increase to 362 ppm (from a 1970 level of 325 ppm). The relatively low levels of resulting from extrapolation of 1950s-1960s data contrast sharply with the following decades, suggesting a faster rate of increase of (a positive second derivative) in the subsequent two decades (as seen in the final row of Table 1).

The level of started increasing at a faster rate in the 1970s and 1980s, so the 1980 model found a best-fit at =962 ppm. The late 1980s is when concern about the issue began to become mainstream.

The 1990 model does not actually have a finite saturation level, and instead fits an exponential pattern with no saturation level, the result of steady increases in the rate of atmospheric accumulation. But even small differences in early years extrapolate to large (and potentially infinite) differences in later years with this model form. The following years all converge on a best-fit value of as the rate of increase slowed.

Models for 2000 and 2010 are very close, with of 580 ppm and 572 ppm respectively. This suggests that after 40 and 50 years, the results begin to stabilize.

The result using the most complete model (2020) is a saturation level of 649 ppm, and implies the increase in won’t begin to slow until 2042 This growth trajectory is well in excess of levels required to keep the global average temperature under a Celsius rise from the 1950s baseline, (that fast approaching level is estimated at 450 ppm) and may be sufficient to melt Arctic and Antarctic ice (Fischetti 2011). For comparison, the ‘worst case’ Representative Concentration Pathways (RCP) scenario RCP8.5 (8.5 of radiative forcing) has a year 2100 level of of over 900 ppm, while the more optimistic RCP2.5 has a 400 ppm concentration, down from a mid-century peak (Van Vuuren et al. 2011).

The overall stability of the models can be considered by examining Table 2. For each data year, we compare all of the models. In almost all cases, the model estimated for a given year has the lowest root mean square error (standard deviation of the residuals) for a given data year, as shown by the bold numbers on the diagonal, which is not surprising. The only exception is the 2010 model has slightly lower RMSE than the 2000 model for year 2000 data. The data-limited earlier year models perform poorly in predicting later years, while the data-rich later year models do reasonably well (though obviously not as well as the earlier year model) in predicting on a limited set of earlier year data. The other notable observation is the 1990 model, which did not converge on an and so functions as an exponential model, does especially poorly in predicting later years, as it overestimates the accumulation of is not the only goodness of fit measure, and the models could be optimized on a difference performance indicator, but it seems to reasonably reproduce the data when the models converge, and there are only small overall differences in RMSE in the converged models for the later years (models estimated for 1980, 2000, 2010, and 2020) over the span of study period, though clearly they have quite different implications.

While there is uncertainty about its absolute magnitude, in the absence of a policy, economic, technological, geologic, environmental, or other shock, forecasts from the past four decades are in broad agreement of the trajectory of the problem.

Future research can test the general question of logistic curve stability with additional types of data, including network extent, vehicle kilometers traveled (VKT), and car ownership. Some of these (such as percent of people who own cars), have a natural upper limit e.g. an of 100%, other types of data are continuous and while there might be a physical maximum, it is not naturally derived, and instead depends on conditions, like VKT.